AI Business

GPUs used in AI datacenters consume a huge amount of electric power. This is a worldwide problem that accelerates global warming. NPUs (Neural Processing Units, LSIs specialized for AI) are being developed to reduce power consumption, but they typically cut power only to about 1/3 to 1/5 of a GPU's. A reduction of this size will not solve the problem. Floadia's CiM (Computing in Memory) achieves about 1/1000 of the power and less than 1/10 of the cost of a GPU. Floadia's CiM technology could be the ultimate solution to the large power consumption of AI systems.

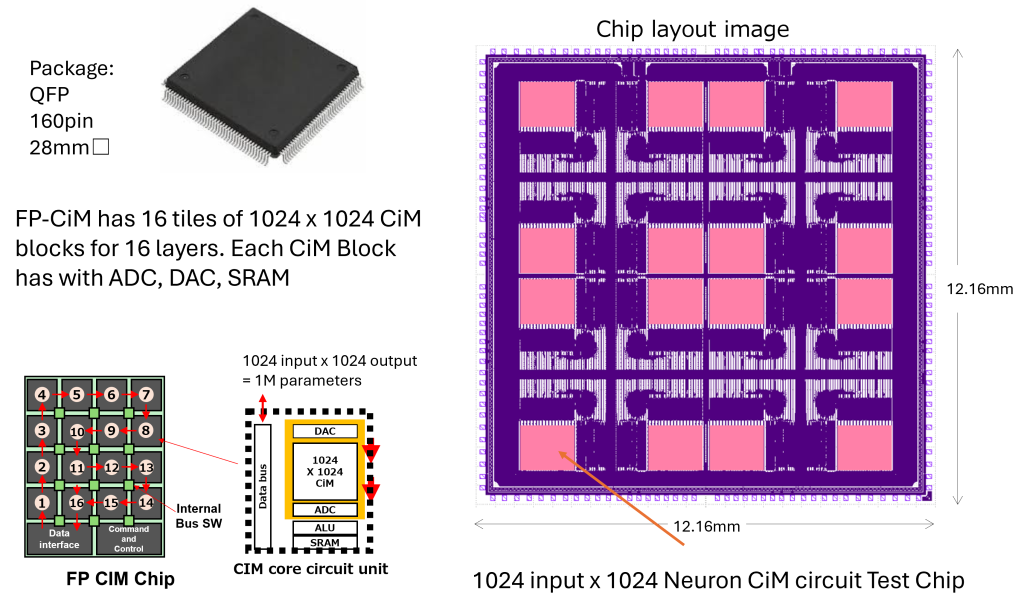

・Less than 1/10 the cost of a GPU

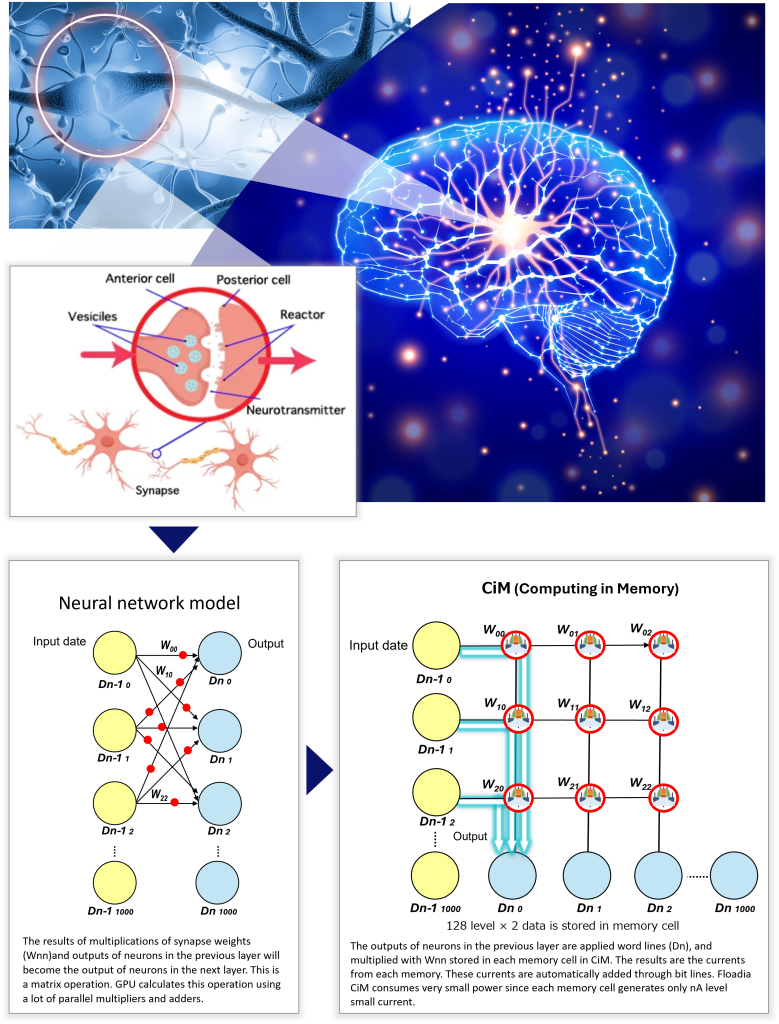

Mechanism of CiM (Computing in Memory): Efficient Calculation in Analog Circuits

Unlike a GPU, which performs calculations with large-scale digital circuits, CiM performs the product-sum (multiply-accumulate) operations of matrix computation in the analog domain, using analog data stored in flash memory cells and analog inputs applied through the word lines. This approach is very simple, consumes extremely little power (about 1/1000 of a GPU), and costs less. Several companies are developing CiM technology, but thanks to its special flash memory technology, no other company has achieved lower power consumption or higher calculation precision than Floadia. Analog calculation contains errors, but neural networks, like the human brain, have mechanisms that cancel them out: calculations in the human brain contain many errors, yet its algorithms compensate for them.
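To make the product-sum idea concrete, here is a minimal toy sketch in Python. It is not Floadia's circuit: the function names, the non-negative example weights, and the bounded 5% uniform error per cell are all illustrative assumptions. Each "cell" contributes weight × input with a small relative error, the contributions of one row are summed (as currents on a shared output line would be), and the result is compared with an exact digital product-sum. The largest output, i.e. the network's decision, stays the same despite the analog error.

```python
import random

def digital_mac(weights, inputs):
    """Exact product-sum y[j] = sum_i weights[j][i] * inputs[i] (the digital reference)."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def analog_mac(weights, inputs, max_rel_error=0.05, seed=0):
    """Toy analog model (hypothetical, not Floadia's circuit).

    Each cell contributes weight * input, perturbed by a bounded relative error
    drawn uniformly from [-max_rel_error, +max_rel_error]; the contributions of
    one row are summed, as currents on a shared output line would be.
    """
    rng = random.Random(seed)
    outputs = []
    for row in weights:
        total = 0.0
        for w, x in zip(row, inputs):
            error = rng.uniform(-max_rel_error, max_rel_error)
            total += w * x * (1.0 + error)
        outputs.append(total)
    return outputs

def argmax(values):
    """Index of the largest value, e.g. the winning class of a classifier layer."""
    return max(range(len(values)), key=values.__getitem__)

if __name__ == "__main__":
    weights = [[0.1, 0.2, 0.3, 0.4],
               [0.9, 0.8, 0.7, 0.6],
               [0.5, 0.5, 0.5, 0.5]]
    inputs = [1.0, 0.5, 0.25, 0.75]
    exact = digital_mac(weights, inputs)
    noisy = analog_mac(weights, inputs)
    print("digital:", exact)
    print("analog: ", noisy)
    # Both pick output 1: a 5% bounded error cannot reorder these well-separated sums.
    print("same decision:", argmax(exact) == argmax(noisy))
```

Because every weight and input in this example is non-negative, each analog output stays within 5% of its exact value; with signed weights, the relative error of a sum can be larger, so this sketch only illustrates the principle.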

CiM Target Applications

FP-CiM 16-Layer Test Chip

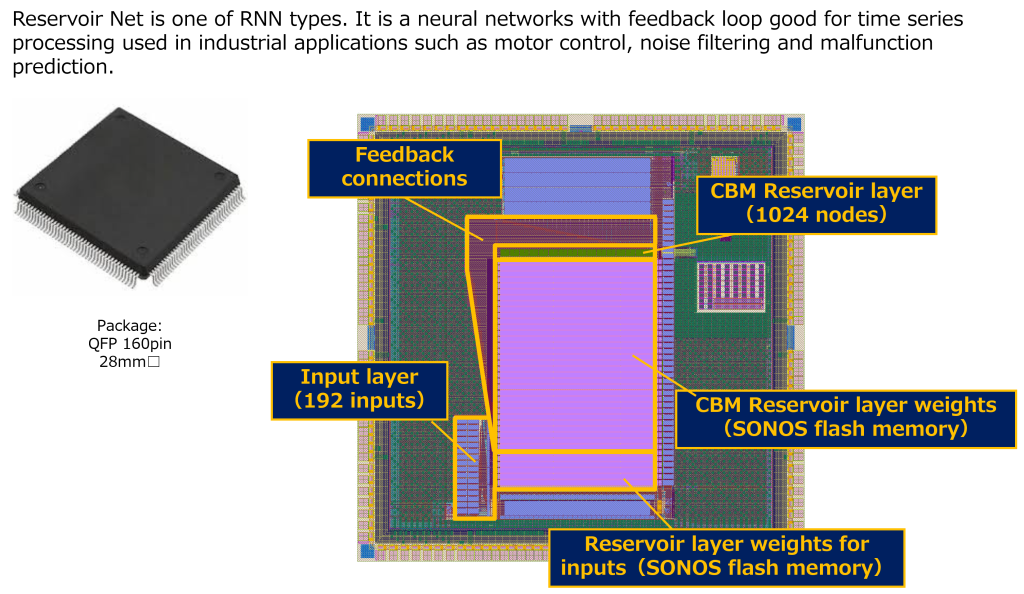

CBM Reservoir Net Test Chip

AI

- Image Recognition

- Voice Recognition

- Anomaly Detection in Sensor Data

AI Edge Computing

- Real-Time Processing

- Reduced load on the Cloud

- AI calculation with ultra-low power consumption

Principle of Computing in Memory

Why Floadia?

- More than 100 times more power-efficient than competitors (overwhelming TOPS/W, i.e. tera-operations per second per watt)

- Low cost (manufactured on 40 nm to 130 nm process nodes)
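TOPS/W is throughput in tera-operations per second divided by power in watts. Since 1 TOPS/W equals 10^12 operations per joule, i.e. one operation per picojoule, the energy per operation in pJ is simply 1 / (TOPS/W), and "100 times more power-efficient" means roughly 100 times less energy per operation. The short Python sketch below shows this arithmetic; the function names and all numbers are hypothetical, and how an "operation" is counted (a multiply-accumulate is often counted as two) varies between vendors.

```python
def tops_per_watt(ops_per_second: float, power_watts: float) -> float:
    """Throughput in tera-operations per second divided by power in watts."""
    return ops_per_second / 1e12 / power_watts

def energy_per_op_pj(tops_w: float) -> float:
    """Energy per operation in picojoules: 1 TOPS/W = 1e12 ops/J = 1 op/pJ."""
    return 1.0 / tops_w

def efficiency_ratio(tops_w_a: float, tops_w_b: float) -> float:
    """How many times more operations per joule chip A performs than chip B."""
    return tops_w_a / tops_w_b

if __name__ == "__main__":
    # Hypothetical chip: 2e12 operations per second at 0.5 W.
    eff = tops_per_watt(2e12, 0.5)
    print(eff, "TOPS/W")                    # 4.0 TOPS/W
    print(energy_per_op_pj(eff), "pJ/op")   # 0.25 pJ/op
    # Hypothetical comparison: 400 TOPS/W versus 4 TOPS/W.
    print(efficiency_ratio(400.0, eff))     # 100.0
```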